Gradient-based Class Activation Mapping (Grad-CAM)

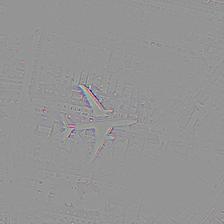

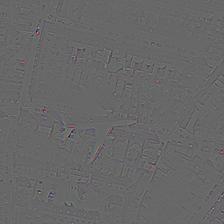

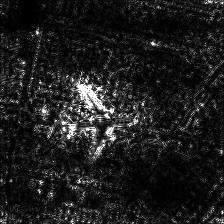

In this project, I implemented Grad-CAM on the UC-MERCED dataset, which is a satellite image dataset. The test image shows an airplane with a dense city in the background. Using Grad-CAM, I checked which features are responsible for predicting the image as class Airplane and as class DenseResidential. The second image highlights the features (parts) responsible for classifying it as Airplane: the upper half of the airplane is highlighted, and the dense city in the background is blurred out. The third image highlights the class DenseResidential: the airplane is blurred out, and the city in the background is highlighted. The last row contains the Guided Grad-CAM outputs.

More about Grad-CAM

Grad-CAM is a technique for making Convolutional Neural Network (CNN)-based models more transparent by producing visual explanations, that is, by visualizing the regions of the input that are “important” for the model's predictions. It uses the class-specific gradient information flowing into the final convolutional layer of a CNN to produce a coarse localization map of the important regions in the image. Grad-CAM is a strict generalization of Class Activation Mapping (CAM). Unlike CAM, Grad-CAM requires no re-training and is broadly applicable to any CNN-based architecture. Grad-CAM can also be combined with existing pixel-space visualizations to create a high-resolution class-discriminative visualization (Guided Grad-CAM).
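
The core computation can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used in this project: it assumes the feature maps of the final convolutional layer and the gradients of the chosen class score with respect to them have already been extracted from the model (for example with framework hooks). The function names `grad_cam` and `guided_grad_cam` are hypothetical, and the nearest-neighbour upsampling is a simplification chosen to keep the sketch dependency-free; smoother interpolation is the more common choice in practice.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Coarse class-localization map from one conv layer.

    activations: feature maps of the final conv layer, shape (K, H, W).
    gradients:   d(class score)/d(activations), same shape.
    Both are assumed to be extracted from the model beforehand.
    """
    # One weight per channel: global-average-pool the gradients over space.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of the feature maps, then ReLU to keep only the
    # regions that push the class score up.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def guided_grad_cam(cam, guided_backprop):
    """Combine a coarse map (h, w) with a pixel-space map (H, W, C).

    Assumes H and W are integer multiples of h and w, and uses
    nearest-neighbour upsampling (a simplification for this sketch).
    """
    h, w = cam.shape
    H, W = guided_backprop.shape[:2]
    upsampled = np.kron(cam, np.ones((H // h, W // w)))  # shape (H, W)
    # Element-wise product keeps fine detail only inside the
    # class-relevant regions.
    return guided_backprop * upsampled[..., None]
```

Calling `grad_cam` once with the gradients of the Airplane score and once with the gradients of the DenseResidential score would give the two class-specific heatmaps described above. Only the gradients change between the two calls; the activations are the same.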